Nico's Homepage

Sign Language Recognition

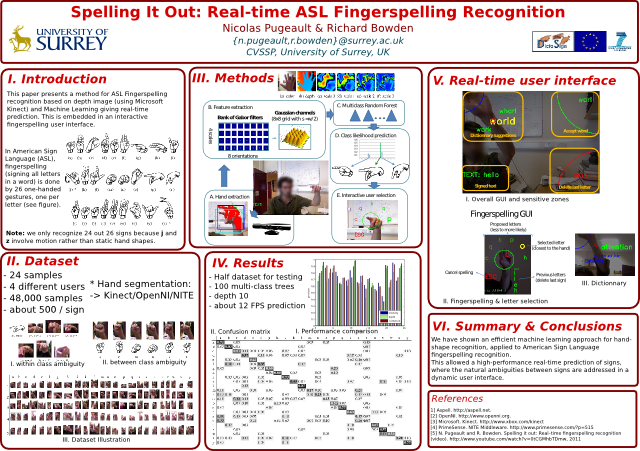

Recently, I have been working on sign language recognition, specifically the recognition of fingerspelling in American Sign Language (ASL). Fingerspelling is a form of sign language in which each sign corresponds to a letter of the alphabet. I have developed an application for real-time fingerspelling recognition using a Kinect with OpenNI and NITE, based on Gabor responses of depth images. Ambiguity between letters with similar hand shapes is resolved by an interactive interface that presents the plausible matches in a semi-circle around the user's hand; the user selects the correct letter with a small hand movement in its direction.
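To give a rough idea of what "Gabor responses of depth images" can look like in practice, here is a minimal sketch in Python with NumPy. It is not the code of the application: the function names (`gabor_kernel`, `gabor_features`), the wavelengths, the number of orientations, the envelope width and the pooling grid are all assumptions chosen for illustration, and the input is assumed to be an already cropped depth image of the hand.

```python
import numpy as np


def gabor_kernel(size, wavelength, theta, sigma):
    """Real Gabor kernel: a cosine carrier under an isotropic Gaussian envelope."""
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    # Coordinate measured along the carrier direction theta.
    x_rot = x * np.cos(theta) + y * np.sin(theta)
    envelope = np.exp(-(x ** 2 + y ** 2) / (2.0 * sigma ** 2))
    carrier = np.cos(2.0 * np.pi * x_rot / wavelength)
    kernel = envelope * carrier
    # Remove the mean so that flat depth regions produce no response.
    return kernel - kernel.mean()


def gabor_features(depth, wavelengths=(4.0, 8.0), n_orientations=4, grid=4):
    """Pooled Gabor response magnitudes of a 2-D depth image.

    All parameter values are illustrative assumptions, not the published ones.
    """
    h, w = depth.shape
    spectrum = np.fft.rfft2(depth)
    features = []
    for wavelength in wavelengths:
        size = int(4 * wavelength) | 1  # odd kernel size
        half = size // 2
        for k in range(n_orientations):
            theta = np.pi * k / n_orientations
            kernel = gabor_kernel(size, wavelength, theta, sigma=0.5 * wavelength)
            # Zero-pad the kernel to the image size and move its centre to the
            # origin so the filtered image is not shifted.
            padded = np.zeros((h, w))
            padded[:size, :size] = kernel
            padded = np.roll(padded, (-half, -half), axis=(0, 1))
            # FFT-based filtering; note that this wraps around at the borders.
            response = np.abs(np.fft.irfft2(spectrum * np.fft.rfft2(padded), s=(h, w)))
            # Average the response magnitude over a grid x grid layout of cells.
            cropped = response[:h - h % grid, :w - w % grid]
            cells = cropped.reshape(grid, h // grid, grid, w // grid).mean(axis=(1, 3))
            features.append(cells.ravel())
    return np.concatenate(features)


if __name__ == "__main__":
    # Synthetic stand-in for a cropped hand depth image: a vertical depth step.
    depth = np.zeros((64, 64))
    depth[:, 32:] = 1.0
    print(gabor_features(depth).shape)
```

The resulting vector (one pooled value per cell, orientation and wavelength) could then be passed to any standard classifier to rank the plausible letters.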

Dataset

We have now released two datasets containing both depth and intensity images of ASL fingerspelling letters. You can get them here, along with the baseline performance of our algorithm: *ASL FINGERSPELLING DATASETS*

This work is part of the EU project Dicta-Sign. Acknowledgements: Richard Bowden.

References

[1] Pugeault, N., and Bowden, R. (2011). Spelling It Out: Real-Time ASL Fingerspelling Recognition. In Proceedings of the 1st IEEE Workshop on Consumer Depth Cameras for Computer Vision, jointly with ICCV'2011.